Data Science via VS Code. Part 3: DataFrame with some basic exploratory tasks


6 min read

Samuel Parsons: Aug 6, 2024 1:51:48 PM


If this is the first post you have opened, I recommend you jump back to Part 1: Install VS Code, relevant extensions and create a virtual environment. What you will find there is pretty self-explanatory.

That first post was written with ‘the what’ in mind, i.e. “getting it done!” - installing the baseline and getting a virtual environment up and running. I deliberately didn’t go into ‘the why’ as I didn’t want to derail the path. If you completed Part 1 successfully, or want to know ‘the why’ first - then read on!

My background is in research and analysis in academia - I’ve trained in SPSS, RStudio, SAS, SAS EM - you get the picture. SPSS ('COMPUTE') and SAS ('PROC') are mostly self-contained, but if you’ve used R or RStudio you will know about the need to download and install packages before you can complete specific tasks (e.g. tidyverse for data wrangling and visualisation).

The concept of packages and libraries is the same when working with Python - where R downloads packages from within RStudio, Python installs them on your machine through a package manager such as Pip or Conda.

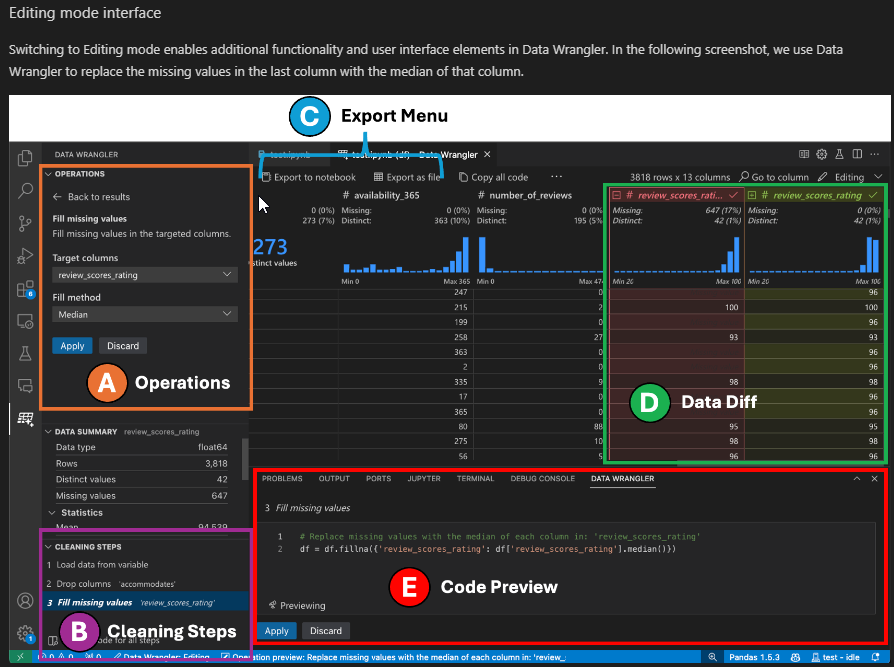

Similar in concept to the packages and libraries above, VS Code Extensions extend the capability of the VS Code application. I won’t go into detail on Data Wrangler, as that will be explored later. Instead, let’s look at the VS Code Python extension, which extends VS Code for Python development in the following ways:

IntelliSense and Autocomplete: Provides smart code completions based on variable types, function definitions, and imported modules.

Linting: Helps you identify and fix errors in your code by highlighting issues as you type.

Debugging: Offers a robust debugging experience with breakpoints, call stacks, and an interactive console.

Unit Testing: Supports running and debugging tests from popular frameworks like unittest and pytest.

Environment Management: Easily switch between different Python environments, including virtual environments and conda environments.

Jupyter Notebooks: Provides support for Jupyter notebooks, allowing you to create and edit notebooks directly within VS Code.

You can always check the functionality by exploring the extension via the Extensions marketplace details tab. Search for Python and review the details of the extension as per below:

The environment is the combination of hardware, software, and other configuration used to develop, test and run software - such as Python modules. The ‘Global’ environment in this context refers to the environment of your local computer (assuming you are working locally), including whatever is particular to that machine, such as its operating system, installed Python version and installed packages.

The reason we use virtual environments is to allow for standardisation of the environment, allowing this same build/test/run process to be used across numerous devices and/or organisations. This is powerful as it allows you to scale across these environments very quickly, flowing natively to other products such as Azure Machine Learning Studio.

Specifically, using a Python virtual environment (venv) offers several benefits, especially for managing dependencies and ensuring project isolation. Here are some key reasons to use a venv:

Dependency Management: Each project can have its own set of dependencies, avoiding conflicts between packages required by different projects.

Isolation: A virtual environment isolates your project from the system-wide Python installation, preventing changes in one project from affecting others.

Reproducibility: You can easily replicate the development environment on different machines, ensuring that other developers can work with the same dependencies.

Version Control: You can use different versions of Python and packages for different projects, making it easier to manage compatibility issues.

No Admin Privileges Needed: You can install packages without needing administrative privileges, which is particularly useful in restricted environments.
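To make the isolation point concrete, here is a minimal sketch that builds a throwaway virtual environment with Python's standard-library venv module and checks for the pyvenv.cfg file that marks a folder as a virtual environment. The temporary folder and the .venv name are assumptions for illustration only; Part 1 created the real environment through VS Code.

```python
import tempfile
import venv
from pathlib import Path

# Build a throwaway environment in a temporary folder (illustrative location).
with tempfile.TemporaryDirectory() as tmp:
    env_dir = Path(tmp) / ".venv"

    # with_pip=False keeps the sketch fast; a real project would normally want pip.
    venv.EnvBuilder(with_pip=False).create(env_dir)

    # pyvenv.cfg records which base Python installation the environment was built from.
    config_text = (env_dir / "pyvenv.cfg").read_text()
    has_config = "home" in config_text

print("pyvenv.cfg written with a 'home' entry:", has_config)
```

Everything the environment needs lives inside that one folder, which is why deleting the folder removes the environment without touching the system-wide Python.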

For more information, see the venv section of the official Python documentation; alternatively, searching for ‘python venv’ will turn up some other great resources.

That’s enough ‘Why’ for now!

First things first, load up VS Code and start your virtual environment. Assuming you have just completed the previous tutorial, the project folder should be open. If not, navigate to the folder via File>Open Folder>…

Once the folder is open you should see the title of the folder (project) in the Explorer pane, with the previously created .venv items.

If you do not have a terminal open, open one via the command palette: Ctrl+Shift+P, search for terminal and select: ‘Python: Create Terminal’.

In the terminal at the bottom of the screen, note the Python terminal mention on the right hand side.

In the terminal at the bottom of the screen type .venv\scripts\activate and press Enter. (This is the Windows path; on macOS or Linux the equivalent is source .venv/bin/activate.)

You should see a green (.venv) appear in front of the folder path in the prompt, indicating the virtual environment is now active.

Note in the screenshot above there are two Python terminals open. This happens if you left a Python terminal open previously; you can close the extra ones by selecting them and then selecting the trashcan (Kill Terminal) icon.
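If you would like to confirm the activation from Python itself rather than from the prompt colour, the following sketch may help. It relies on the fact that inside a virtual environment sys.prefix points at the environment folder while sys.base_prefix points at the Python installation it was built from. The function name in_virtual_environment is my own, not something from VS Code.

```python
import sys


def in_virtual_environment():
    """Return True when the running interpreter belongs to a virtual environment."""
    # The two prefixes only differ when a virtual environment is in use.
    return sys.prefix != sys.base_prefix


print("Interpreter:", sys.executable)
print("Virtual environment active:", in_virtual_environment())
```

Saved as a script and run from the activated terminal, the interpreter path should sit inside your project's .venv folder.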

To work in Python, we will use a Jupyter notebook. To create a notebook - select the Data Science Exploration folder on the left hand side under the Explorer view, and select the first icon on the left ‘New File…’

Name the New File ‘Titanic Data Exploration.ipynb’ - the .ipynb is the file extension for a Jupyter notebook. Press enter and watch as the icon of the file changes to the orange symbol, and the new notebook opens.

The Notebook should appear as below:

The mention of Python in the bottom right hand corner of the code cell shows that the cell's language is set to Python - the text will automatically be syntax-highlighted as Python code. Let’s test this by typing the following:

# This is a comment

# This is a demo Python code

def greet(name):
    print(f"Hello, {name}!")

greet("Arkahna Wizard!")

The text should auto-highlight:

Click “Run All”, or the Run button to the left of the # This is a comment line, to run the code.

You will be prompted to select a kernel in the palette - select (1): Python Environments…

Then select the Python .venv kernel (1) in the virtual environment:

Note in the screenshot above my system has a second instance of Python 3.12.4 installed - in my Global Environment. This will not be present on your system unless you have separately installed a Global Environment version.

You will be prompted to add the ipykernel package - install it:

The kernel will then execute the code, and the printed response will appear below the code cell:

Note the Python kernel we selected is now named on the top right hand side - .venv (Python 3.12.4). This indicates we are using that environment to run this notebook.

Can you understand how the code worked?

Seems complex right?

Why don’t you try adding the following below:

print("Hello, Arkahna Wizard!!!")
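If the function version still feels opaque, this small sketch splits it into two steps: building the text, then printing it. The name build_greeting is made up for illustration; it is a variation on the greet function from the first cell, not a replacement for it.

```python
def build_greeting(name):
    """Return the greeting text instead of printing it straight away."""
    # The f-string substitutes whatever was passed in as name.
    return f"Hello, {name}!"


# Step 1: call the function and keep the result.
message = build_greeting("Arkahna Wizard!")

# Step 2: print the stored text, just like a direct print() call.
print(message)
```

The function only earns its keep once you want the same greeting for many different names; for a single message, the direct print is simpler.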

For this demonstration I want to use a known, widely available dataset - the Titanic survivor data.

We can load that data with the pandas package, which we will install via Pip (you could use conda or similar, but I prefer Pip).

First things first: we need pip itself. The good news is that Pip is probably already present on your system, as most Python installers also install Pip. Pip is already installed if you use Python 2 >=2.7.9 or Python 3 >=3.4 downloaded from python.org. If you work in a virtual environment, pip also gets installed.

‘Pip install’ is the command you use to install Python packages with the Pip package manager. If you’re wondering what Pip stands for, the name Pip is a recursive acronym for ‘Pip Installs Packages.’ There are two ways to install Python packages with pip:

Manual installation

Using a requirements.txt file that defines the required packages and their version numbers.

We will use option 1 throughout the demo, although we will create a requirements.txt file later on.
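To give a feel for what a requirements.txt file holds before we create one, here is a rough sketch that lists the packages installed in the current environment as name==version lines using the standard-library importlib.metadata module. The helper name requirement_lines is my own, and the output is only an approximation of what the pip freeze command reports.

```python
from importlib import metadata


def requirement_lines():
    """Return sorted 'name==version' lines for every installed distribution."""
    lines = set()
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip any distribution with unreadable metadata
            lines.add(f"{name}=={dist.version}")
    return sorted(lines, key=str.lower)


for line in requirement_lines():
    print(line)
```

A file containing lines like these can later be installed in one go with pip install -r requirements.txt.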

Thanks to Copilot Designer for the Pandas Python Bing Bling

‘pandas is a Python package providing fast, flexible, and expressive data structures designed to make working with “relational” or “labeled” data both easy and intuitive. It aims to be the fundamental high-level building block for doing practical, real-world data analysis in Python. Additionally, it has the broader goal of becoming the most powerful and flexible open source data analysis/manipulation tool available in any language.’ - see the Package overview in the pandas 2.2.2 documentation (pydata.org)

Open the terminal at the bottom of your screen (Can’t find it? Press Ctrl+Shift+P, type terminal, select ‘Python: Create Terminal’ and open one).

In the terminal type:

.venv\scripts\activate

This activates the virtual environment within the terminal.

In the terminal type:

pip install pandas

On pressing enter, the pandas install process will commence.

Note: this is the same process we started earlier for the ipykernel package - except that was started by installing from the pop-up window.

Once the process is complete move to the next step.
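One way to double-check that the install landed in the environment your notebook is using is to ask Python for the installed version. This is a small sketch using the standard-library importlib.metadata module; the helper name installed_version is hypothetical.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


pandas_version = installed_version("pandas")
if pandas_version is None:
    print("pandas is not installed in this environment")
else:
    print("pandas version:", pandas_version)
```

If this reports that pandas is missing, the usual culprit is that the terminal or the notebook is pointing at a different environment from the one you installed into.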

As unlikely as it is that you made it this far without Pip, when you test and adjust with Python you will often find all sorts of interesting errors. It happens. In the unlikely event you are using an older version of Python, or were otherwise unable to install the package above, you will need to install Pip.

To install Pip visit: Pip Install: How To Install and Remove Python Packages • Python Land Tutorial

Then repeat the section above and continue on.

It may feel like a long road to this point! We are nearly there!

With pandas successfully installed, let’s download and explore some data.

Head back to the notebook window, and hover your mouse just below where the message ‘Hello, Arkahna Wizard!!’ was printed - you should see a + Code button. Press that.

In the new cell block, type the following:

# Load library

import pandas as pd

# Create URL

url = 'https://raw.githubusercontent.com/chrisalbon/sim_data/master/titanic.csv'

# Load data as a dataframe

dataframe = pd.read_csv(url)

# Show first 5 rows

dataframe.head(5)

Run the code by pressing the execute button to the left of the code block (1), or alternatively the Run All (2) button at the top of the notebook - see below:

The code above completes the following steps:

Loads the pandas library as pd

Creates a url variable (a string holding the path to the Titanic csv)

Creates a dataframe by using the pandas read_csv function to read the csv file at that url

Shows the first 5 rows of the dataframe (rows 0 to 4, displayed under a header row of column names).
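The same steps can be tried without an internet connection. The sketch below feeds read_csv a tiny made-up table from an in-memory string instead of a url; the column names and rows are invented for illustration and are not taken from the Titanic file.

```python
import io

import pandas as pd

# Made-up sample rows for illustration only; not real Titanic records.
csv_text = """name,age,survived
Passenger A,29,1
Passenger B,2,0
Passenger C,30,0
"""

# read_csv accepts a file-like object as well as a url or file path.
sample = pd.read_csv(io.StringIO(csv_text))

# head(2) returns the first two rows, which carry index labels 0 and 1.
first_two = sample.head(2)
print(first_two)

# shape reports (number of rows, number of columns).
print(sample.shape)
```

The first line of the text becomes the column header, and pandas adds the row index starting at 0, which is the same behaviour you see on the Titanic dataframe.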

Congratulations! We got there in the nick of time - the blog post nearly ran out

Great work! This was a lengthy tutorial - well done for making it this far.

In this tutorial we have explored ‘the why’, introduced Python notebooks, installed packages, and loaded a dataframe from a csv file at a source url. You can revisit any of these steps at any time by reviewing the content in the Python notebook, or by exploring the pip packages, e.g. pandas · PyPI, to find out more.

Join me next time as we put our statistics hats on and dive into this data for some data modelling.

Continue to Part 3: DataFrame with some basic exploratory tasks
