
4 min read

Samuel Parsons: Mar 25, 2024 8:00:00 AM


LinkedIn announcement of the Arkahna AI/ML specialisation

The past few months have been defined both by the surge of AI-related releases and announcements internationally and by the volume of Azure OpenAI projects locally. Arkahna have completed a number of Azure OpenAI projects for Government and non-Government clients alike. The scope of these has ranged from chat-style interfaces with an internal or external focus to larger provisioned throughput unit (PTU) deployments of OpenAI models for bulk downstream consumption.

Azure OpenAI Service gives customers advanced language AI, including OpenAI's GPT-4, GPT-3, Codex, DALL-E and Whisper models, with the security and enterprise promise of Azure. Azure OpenAI co-develops the APIs with OpenAI, ensuring compatibility and a smooth transition from one to the other.

With Azure OpenAI, customers get the security capabilities of Microsoft Azure while running the same models as OpenAI.

Azure OpenAI offers private networking, regional availability, and responsible AI content filtering.

Which option should you take? If you are looking to boost your business intelligence capability by harnessing the documents and unstructured data across your organisation, Arkahna Elements OpenAI is the foundation stone of AI for you.

A number of our projects have involved working with the Government of Western Australia. One example was conversational search across a number of external-facing websites: the CMS backend database was exported to JSON, and the data was then ingested by Azure AI Search using skillsets enriched with Azure AI Services. The resulting indexes were made available to the orchestration platform, enabling conversational search over the website data. Responses from the LLM were supported with numbered citations that hyperlink to the live pages. The solution was made available as an internal proof of concept to interested parties for a limited round of testing prior to wider rollout.
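The CMS-to-index step described above can be sketched in Python. This is a minimal illustration, not the project's actual pipeline: it assumes a hypothetical CMS export shaped as a list of pages with `id`, `title`, `url` and `body` fields, strips the HTML from each body, and produces flat records that an Azure AI Search index could ingest. The field names, example URLs and the `to_search_documents` helper are all assumptions.

```python
import html
import json
import re

# Hypothetical CMS export; a real CMS schema will differ.
CMS_EXPORT = """
[
  {"id": 101, "title": "Fishing licences", "url": "https://example.com/fishing",
   "body": "<p>Apply for a <b>recreational</b> fishing licence online.</p>"},
  {"id": 102, "title": "Park passes", "url": "https://example.com/parks",
   "body": "<p>Day passes &amp; annual passes are available.</p>"}
]
"""

TAG_RE = re.compile(r"<[^>]+>")


def strip_html(markup: str) -> str:
    """Remove tags, decode HTML entities and collapse whitespace."""
    text = html.unescape(TAG_RE.sub(" ", markup))
    return " ".join(text.split())


def to_search_documents(export_json: str) -> list[dict]:
    """Flatten CMS pages into index-ready records (keys are assumed index fields)."""
    pages = json.loads(export_json)
    return [
        {
            "id": str(page["id"]),  # Azure AI Search document keys must be strings
            "title": page["title"],
            "url": page["url"],  # kept so answers can cite the live page
            "content": strip_html(page["body"]),
        }
        for page in pages
    ]


if __name__ == "__main__":
    for doc in to_search_documents(CMS_EXPORT):
        print(doc["id"], doc["url"], doc["content"])
```

Carrying the `url` field through to each indexed record is what makes hyperlinked, numbered citations possible later in the conversation. With the `azure-search-documents` package, records like these could be pushed with `SearchClient.upload_documents`, although an indexer reading the JSON export directly is an equally valid route.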

A second Government example involved the capture and synthesis of case notes. The notes were published to a central knowledge base, which was then exported to a centrally managed greenfield Azure subscription. The Arkahna Elements OpenAI accelerator was deployed to the subscription, with conversational tuning enabling a formal conversation about key behaviours and observed outcomes over given time periods. The platform used a larger provisioned throughput unit (PTU) deployment of an OpenAI model: a centrally managed model that Arkahna made available for use by the WA Government in another engagement.

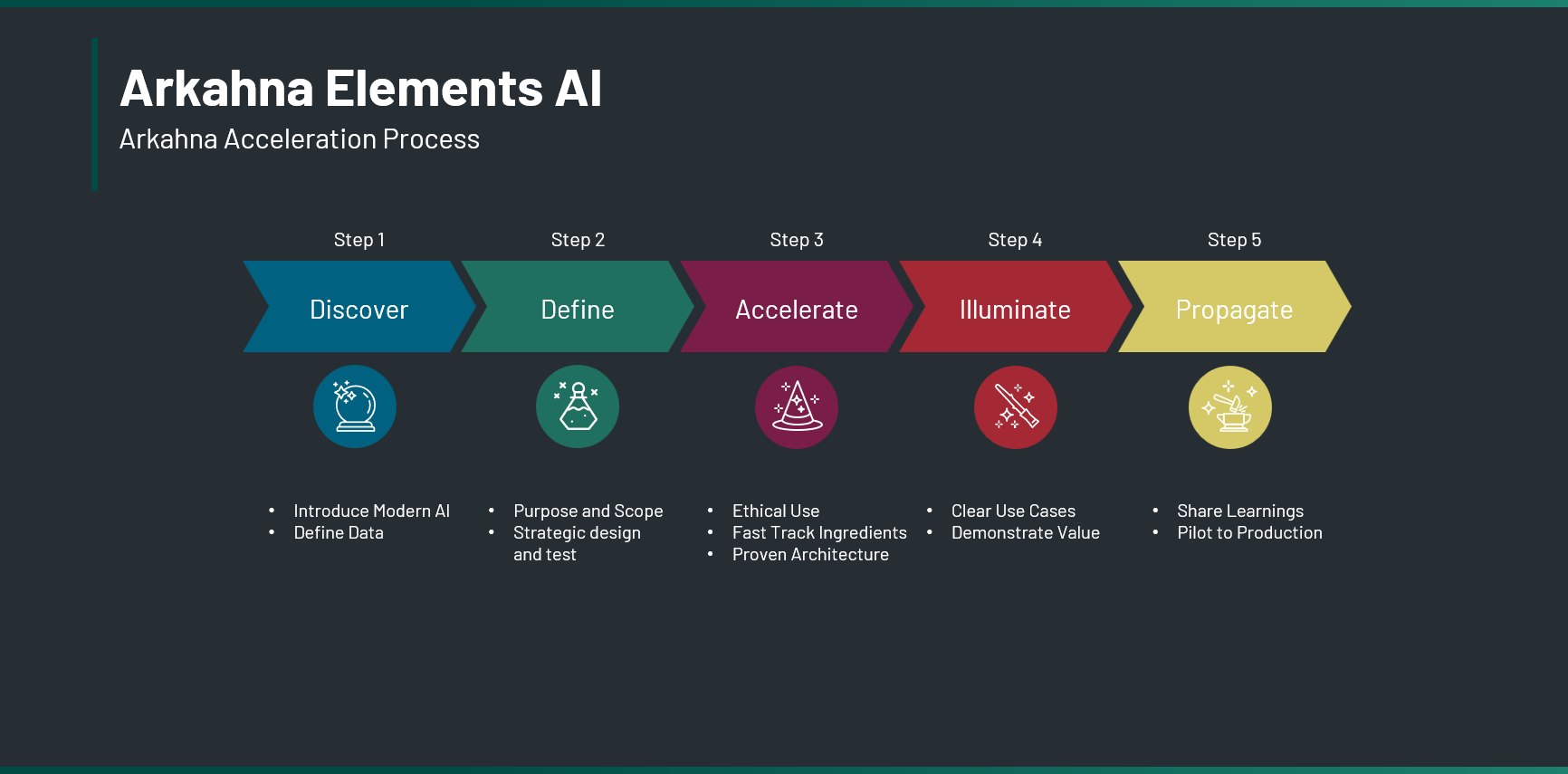

Only through continued exposure to proofs of concept, pilots and emerging production workloads have Arkahna been able to establish and baseline our approach, underpinned by the Azure OpenAI Transparency Note. Our key learnings for any project are the following actions, which help you demonstrate value and encourage the safe use and testing of AI across your organisation while safeguarding your corporate knowledge:

Demonstrate value, quickly: focus on a safe proof of concept or pilot in the initial phases to reduce change advisory board (CAB) and other production-level requirements. This reduces project cost, time to testing and time to demonstrating value.

Defined, aligned outcomes: align the project with existing strategic roadmaps for innovation and uplift across your organisation, and define the success criteria for your use case upfront, ensuring they align with your Operational or Digital Strategies.

Innovation, not limitation: remove data risks and drive a conversation about innovation rather than limitation. Data governance is everyone’s business: orient your workload toward low-risk, accessible information to put the focus on the emerging functionality, not the barriers to broader access.

Utilise accelerators: Arkahna Elements OpenAI incorporates a responsible AI ethos, with a proven design throughout the lifecycle that provides an evidence-based architectural baseline, delivered via CI/CD processes, to support production workloads.

Share the learnings: the journey to production can be a winding road. Connect with peers internally and externally to share lessons learned and recommendations, so you can move forward with best practice at reduced cost.

Over time, our Elements AI solution has evolved a competitive user interface, functionality and stability comparable to the most recent Microsoft accelerators. Our ongoing support for the solution enables your team to stay ahead.

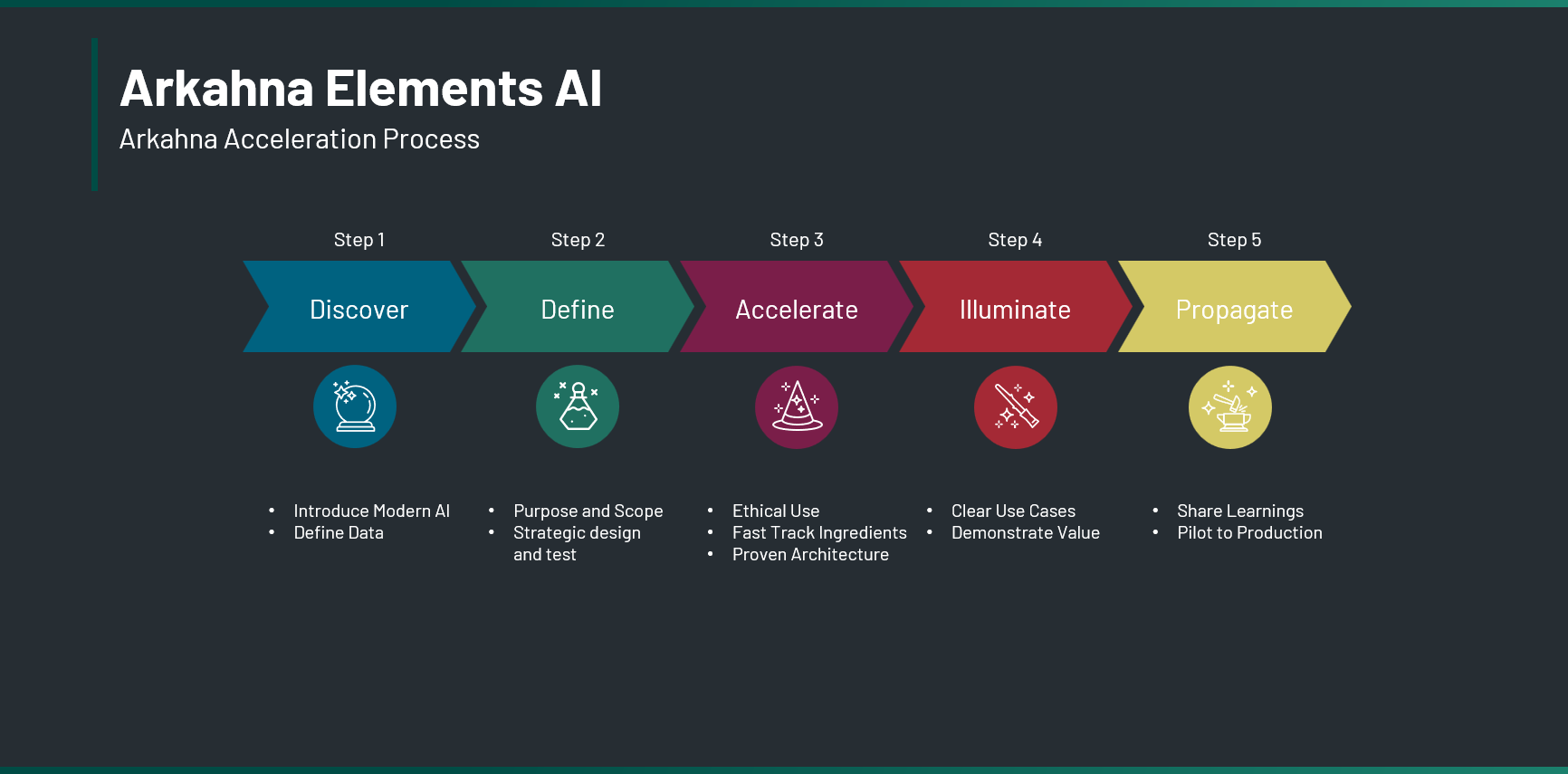

The most common request we receive is “Make it like ChatGPT, but over our own data”. This pattern is known as Retrieval Augmented Generation (RAG): the process used to let OpenAI large language models (LLMs) hold a conversation over internal data. The process starts with the conversation in the web interface. The user prompt is then used to query the underlying datasets (via Azure AI Search), and the retrieved results are passed back to the LLM, which assembles them into a written response.
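The retrieve-then-generate flow can be sketched in a few lines. This is a minimal sketch under stated assumptions: the keyword-overlap scoring is a deliberately simple stand-in for an Azure AI Search query, the prompt wording and the `retrieve`/`build_prompt` helpers are hypothetical, and the final call to the model is left out so the example runs offline.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    title: str
    url: str
    content: str


def tokenize(text: str) -> set[str]:
    """Lower-case alphanumeric terms; a crude substitute for a search analyzer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Doc], top_k: int = 2) -> list[Doc]:
    """Rank documents by shared query terms (stand-in for Azure AI Search)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.title + " " + d.content)), d) for d in docs]
    scored = [pair for pair in scored if pair[0] > 0]  # drop non-matching docs
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable for ties
    return [d for _, d in scored[:top_k]]


def build_prompt(question: str, sources: list[Doc]) -> str:
    """Assemble a grounded prompt whose numbered sources the model can cite as [n]."""
    lines = ["Answer using only the sources below. Cite them as [n].", ""]
    for i, doc in enumerate(sources, start=1):
        lines.append(f"[{i}] {doc.title} ({doc.url}): {doc.content}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


if __name__ == "__main__":
    corpus = [
        Doc("Leave policy", "https://example.com/leave", "Staff accrue annual leave monthly."),
        Doc("Travel policy", "https://example.com/travel", "Book travel through the portal."),
    ]
    question = "How is annual leave accrued?"
    print(build_prompt(question, retrieve(question, corpus)))
```

In a real deployment, the retrieval step would be a query against an Azure AI Search index and the assembled prompt would be sent to an Azure OpenAI chat deployment (for example through the `openai` package's `AzureOpenAI` client). Numbering the sources in the prompt is one simple way to obtain the citations described earlier.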

Retrieval Augmented Generation Visual - Credit: Microsoft.

This concept is well understood across the community, with innovation now focused on two components: the App UX / Orchestrator and the Data Source / Data Ingestion Pipeline. Improving the former delivers a visual experience similar to the market-leading interfaces, whilst improving the latter boosts the content and quality of the results.

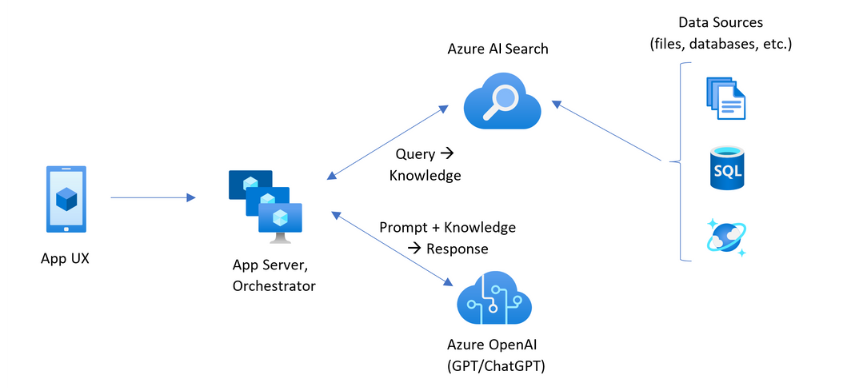

SharePoint is a great place to store corporate information, until it isn't. Free-form information storage lets local teams store their data in a way that suits them and simultaneously mystifies the wider organisation! The native SharePoint Online search function will only get you so far!*

*Not very far at all

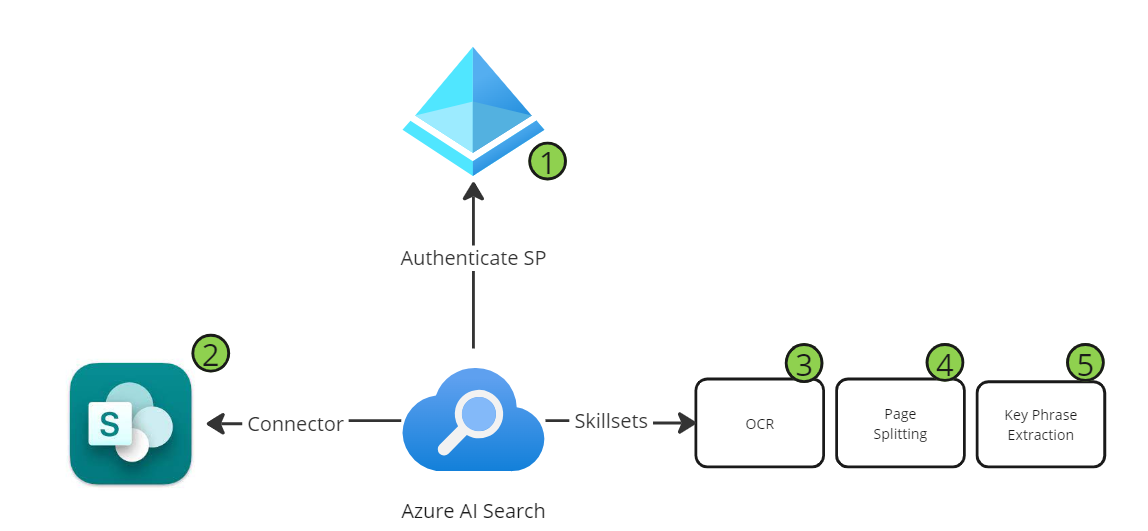

For the use case of corporate policies and documents, the newly released SharePoint Online connector (in preview) connects to SharePoint sites, providing a baseline of access. The native SharePoint keyword search covers PDF titles and associated metadata. Users are often confused that text they can select within a PDF in their web browser cannot be searched. This is because the native search does not incorporate Optical Character Recognition (OCR): the tooling simply cannot read the content to make it available.

Arkahna have resolved this issue by building upon the connection and data ingestion, enriching the indexes with Optical Character Recognition (OCR) in the Azure AI Search ingestion pipeline. This allows accurate indexing of the content of files that were previously unsearchable within SharePoint Online search.
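For reference, here is a sketch of an Azure AI Search skillset that applies OCR, expressed as a Python dict mirroring the REST JSON body. It follows the documented pattern of pairing the OCR skill (`#Microsoft.Skills.Vision.OcrSkill`) with the Text Merge skill, so text read from embedded images is folded back into the document text. This is not Arkahna's production definition: the skillset name and the `merged_content` target field are hypothetical, and the `cognitiveServices` property that attaches an Azure AI Services resource for billing is omitted. OCR only receives images if the indexer extracts them, which is why the indexer fragment sets `imageAction`.

```python
import json

# Skillset body for the Azure AI Search REST API (PUT /skillsets/{name}).
# "cognitiveServices" (Azure AI Services attachment for billing) is omitted here.
skillset = {
    "name": "sharepoint-ocr-skillset",  # hypothetical name
    "description": "OCR embedded images and merge the text into the document",
    "skills": [
        {
            # Reads text from each image the indexer extracted from the file.
            "@odata.type": "#Microsoft.Skills.Vision.OcrSkill",
            "context": "/document/normalized_images/*",
            "defaultLanguageCode": "en",
            "detectOrientation": True,
            "inputs": [{"name": "image", "source": "/document/normalized_images/*"}],
            "outputs": [{"name": "text", "targetName": "text"}],
        },
        {
            # Inserts the OCR text back into the document text at each image's offset.
            "@odata.type": "#Microsoft.Skills.Text.MergeSkill",
            "context": "/document",
            "insertPreTag": " ",
            "insertPostTag": " ",
            "inputs": [
                {"name": "text", "source": "/document/content"},
                {"name": "itemsToInsert", "source": "/document/normalized_images/*/text"},
                {"name": "offsets", "source": "/document/normalized_images/*/contentOffset"},
            ],
            # Hypothetical field; map it to an index field via outputFieldMappings.
            "outputs": [{"name": "mergedText", "targetName": "merged_content"}],
        },
    ],
}

# Indexer "parameters" fragment: without image extraction, the OCR skill sees nothing.
indexer_parameters = {
    "configuration": {
        "dataToExtract": "contentAndMetadata",
        "imageAction": "generateNormalizedImages",
    }
}

if __name__ == "__main__":
    print(json.dumps(skillset, indent=2))
```

The merged field, rather than the raw `content`, is the field you would then split, enrich and index for retrieval.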


This OCR enrichment is then combined downstream with key phrase extraction and document page splitting, enabling RAG conversations over large files regardless of your chosen model's token limits. The Arkahna Elements OpenAI conversational prompt includes a built-in citation and insights explanation process, allowing users to self-serve data across their SharePoint Online repositories and immediately access documents individually or as a collective summary, without relying on the poorly equipped search engine of the past.
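To illustrate why page splitting lets large files fit within a model's token limit, here is a minimal local sketch. It approximates tokens with whitespace-separated words. A real pipeline would count tokens with the model's tokenizer, or would use Azure AI Search's Text Split skill (`#Microsoft.Skills.Text.SplitSkill`) with `textSplitMode` set to `pages`. The `split_pages` helper and its default page size and overlap are hypothetical.

```python
def split_pages(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into overlapping windows of at most max_words words.

    The overlap repeats the tail of one page at the head of the next, so a
    passage that straddles a boundary can still be retrieved in one piece.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("require max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    pages = []
    step = max_words - overlap  # how far each new page advances
    for start in range(0, len(words), step):
        pages.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):  # this page reached the end
            break
    return pages


if __name__ == "__main__":
    doc = " ".join(f"w{i}" for i in range(10))
    print(split_pages(doc, max_words=4, overlap=1))
    # ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Each page is indexed and retrieved on its own, so only the few relevant pages of a long policy document are sent to the model. This keeps the prompt within the token budget, however large the source file is.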


The examples above demonstrate some baseline benefits of deploying Azure OpenAI and integrating it with common data holdings in business contexts. The fun really begins when the data team open the door to calling these same OpenAI models via API in their data pipelines for upstream and downstream management, refinement and semantic analysis of unstructured data.

For the longest time, unstructured data holdings have required costly rewriting and codifying before they could be used in research, marketing or associated data science analytics. Semantic analysis involved journal articles, RStudio and associated classification libraries such as Rattle or Weka 3. These can (mostly) be replaced with a standard model: the same model that can then write you a report on the findings. But that is a post for another time…
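As a rough illustration of swapping a hand-built classifier for a model call, here is a sketch of one pipeline step that labels free-text records. The label set, prompt wording, JSON reply format and the `classify_records` helper are hypothetical. The model call is injected as a plain function, so the step can run without network access. In this example, a keyword-based stub (`fake_complete`, also hypothetical) stands in for the model.

```python
import json
from typing import Callable

LABELS = ("complaint", "compliment", "question")  # hypothetical label set


def build_classification_prompt(text: str) -> str:
    """Ask for exactly one label, returned as a small JSON object."""
    return (
        "Classify the feedback into exactly one of: "
        + ", ".join(LABELS)
        + '. Reply with JSON like {"label": "question"}.\n\n'
        + "Feedback: " + text
    )


def classify_records(records: list[str], complete: Callable[[str], str]) -> list[str]:
    """Label free-text records; `complete` sends a prompt and returns the reply text."""
    labels = []
    for text in records:
        reply = complete(build_classification_prompt(text))
        try:
            label = json.loads(reply).get("label", "unknown")
        except (json.JSONDecodeError, AttributeError):
            label = "unknown"  # reply was not a JSON object
        labels.append(label if label in LABELS else "unknown")  # reject off-list labels
    return labels


def fake_complete(prompt: str) -> str:
    """Hypothetical offline stand-in for a real model call."""
    feedback = prompt.rsplit("Feedback: ", 1)[1].lower()
    if "?" in feedback:
        return '{"label": "question"}'
    if "thanks" in feedback:
        return '{"label": "compliment"}'
    return "not json"


if __name__ == "__main__":
    rows = ["When does the office open?", "Thanks for the quick reply", "The form kept crashing"]
    print(classify_records(rows, fake_complete))
```

In practice, `complete` could wrap a chat completions call through the `openai` package's `AzureOpenAI` client against your own deployment. Validating every reply against the allowed labels keeps a malformed or off-list answer from leaking into downstream analytics.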

Head over to the Arkahna Nexus, our dedicated training space. Sign up to receive more information or to add your name to the list for future training events such as our OpenAI Accelerator: 4hrs to Safe Internal AI Testing, where we explore the full spectrum of Azure AI offerings and how to align them to your organisational requirements, before demonstrating a quick-build retrieval augmented generation (RAG) solution over some Star Wars data!
